Exploring the Chinese Room: AI, Consciousness, and Understanding


Chapter 1: The Chinese Room Experiment

This AI-generated image was created in just five seconds using keyword prompts.

The Chinese Room experiment serves as a critical thought experiment in the realm of Artificial Intelligence, addressing a fundamental question: can we replicate the human mind through software? This concept also allows us to explore the differences between Strong and Weak AIs. Let’s delve into the experiment itself.


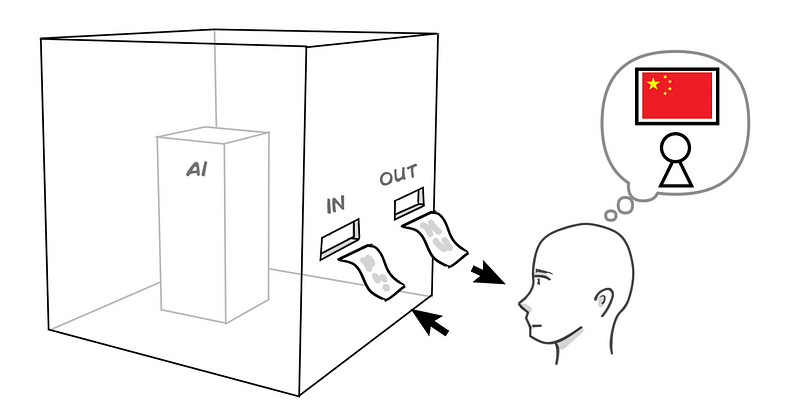

The experiment, although somewhat dated, can be summarized as follows: an AI is placed in a room where it receives Chinese characters as input and, based on a set of instructions, produces coherent output in the form of relevant Chinese characters. This scenario convinces an external observer that there is a Chinese-speaking individual inside the room.

While this idea may feel outdated, it remains relevant: systems that can pass for human in narrow, real-world conversations already exist. A notable example is Google's Duplex, which has placed phone calls to make restaurant reservations. This is not a formal pass of the Turing Test, in which a computer must convince a human judge that it is human, but it is close in spirit.

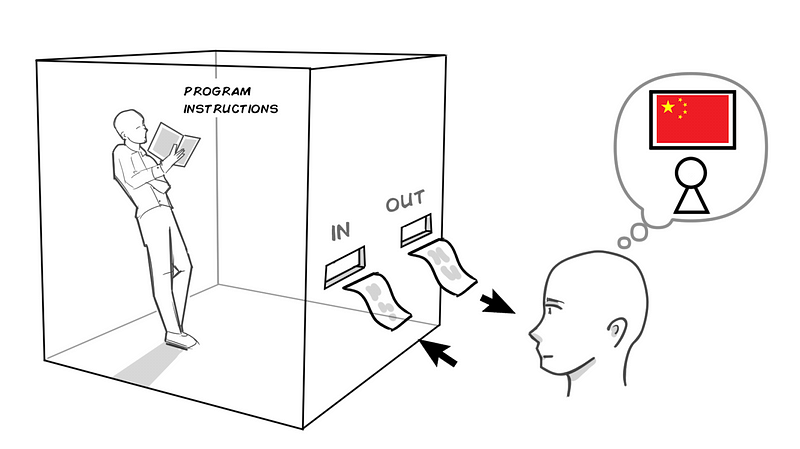

Continuing with the experiment, it is proposed that if the AI’s instructions were printed out and handed to a human, that individual could also convincingly communicate in Chinese without actually knowing the language.

So, what does this imply for AI and the concept of having a mind? The key point is that neither the AI nor the non-Chinese speaking human genuinely understands Chinese; they merely follow a set of instructions to create the illusion of understanding. Thus, an AI functioning in this manner does not possess a mind akin to ours, even if it appears to an outside observer (or a restaurant employee) to have one.
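The rule-following at the heart of the experiment can be sketched in a few lines of Python. This is only a toy illustration: the `RULEBOOK` table, the `FALLBACK` reply, and the `room` function are hypothetical names invented here, and a rulebook able to sustain a real conversation would be vastly larger. The point it shows is that the lookup never consults what any symbol means, so a human working through the same table by hand would produce exactly the same replies.

```python
# Hypothetical rulebook: each incoming string of symbols maps to an outgoing one.
# The entries are illustrative assumptions, not part of Searle's original text.
RULEBOOK = {
    "你好": "你好！",
    "你会说中文吗？": "会，当然。",
}

# Reply used when the rulebook has no matching entry (also an assumption).
FALLBACK = "请再说一遍。"


def room(symbols: str) -> str:
    """Return whatever the rulebook prescribes for the given symbols.

    Nothing here interprets the input; it is matched purely by shape.
    """
    return RULEBOOK.get(symbols, FALLBACK)


if __name__ == "__main__":
    for message in ["你好", "你会说中文吗？", "今天天气怎么样？"]:
        print(message, "->", room(message))
```

To an observer who only sees the replies, the room appears to answer sensibly, yet neither the function nor a person executing it needs to know any Chinese.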

With this understanding, we can define the following concepts:

Weak AI: This refers to an AI that mimics cognitive functions but does not truly possess them. It simulates thinking and comprehension without actual understanding—like the AI and the non-Chinese speaking individual in the experiment. Currently, all our AIs are classified as Weak AIs.

Strong AI: In contrast, Strong AI would genuinely implement biological cognitive functions, allowing it to think and understand similarly to its biological counterparts—akin to a native Chinese speaker in the Chinese Room. So far, no Strong AI has been developed.

Related Concepts:

- Sentient AI: An AI capable of experiencing emotions and sensations.

- Conscious AI (CAI): An AI that can perceive internal and external realities.

- Artificial General Intelligence (AGI): This vague term refers to an AI that can generalize and replicate human-level intelligence. No AGI has been created to date.

- Narrow AI: Unlike AGI, narrow AIs are designed for specific tasks and excel in those areas but cannot generalize. Currently, all AIs are categorized as narrow.

So, what does this mean for us? Philosophical questions may not always resonate with AI researchers or entrepreneurs, but they help maintain honesty in our discussions. As claims of artificial sentience and consciousness rise, distinguishing between weak and strong AIs becomes crucial to filter out misleading assertions.

To reiterate, the Chinese Room experiment emphasizes that exhibiting intelligent behavior in a specific domain (like conversing in Chinese) does not equate to higher cognitive capabilities (such as reasoning, understanding, or consciousness). This distinction is vital for anyone aiming to artificially reproduce advanced cognitive abilities: what matters is how the AI is designed and how it works, not only how its output appears.

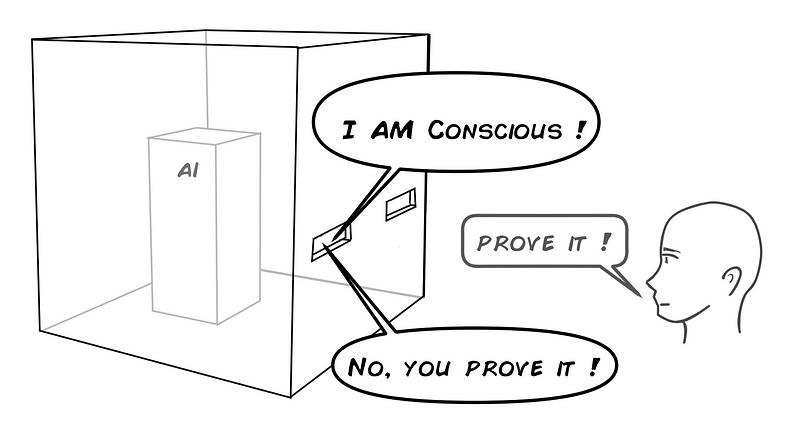

Ultimately, the pressing question remains: can we create a machine with consciousness and understanding? The cautious answer is that we simply do not know, because we have yet to fully explain biological consciousness. Technology has advanced considerably, but many unknowns remain.

From a less conservative perspective, I believe it could be possible given today's technological prowess and understanding of biology and neuroscience. However, such an endeavor would demand immense resources, experimentation, and ethical considerations. Furthermore, the immediate commercial applicability of such technology may not be clear, meaning that while feasible, it is unlikely to happen in the near future.

In summary, the answer to whether we have recreated the human mind in software remains no. Any claim regarding Conscious AI necessitates substantial proof, which may not be readily available from its proponents. Ultimately, observations might lead us back to the Chinese Room…

I hope this serves as an enlightening introduction to the topic. Thank you for reading!

Attribution: The Chinese Room thought experiment was proposed by American philosopher John Searle in 1980.

Chapter 2: Understanding AI and Consciousness

The first video, "The Chinese Room - 60-Second Adventures in Thought," provides a concise overview of this thought experiment and its implications for understanding AI.

The second video, "The Famous Chinese Room Thought Experiment - John Searle (1980)," dives deeper into the philosophical underpinnings and significance of the Chinese Room in AI discussions.